RESEARCH

The Center for an Informed Public is an interdisciplinary research initiative at the University of Washington that helps individuals, communities and institutions navigate our complex information environments.

Being Sensemakers: A Framework for University-Based Rapid Research of Elections, Crisis Events, and Beyond

The CIP’s 2024 Election Rumor Research Project (ERRP) pursued a kind of research that often runs counter to traditional academic norms and incentives: it moves fast, responds to urgent public information needs in real time, spans and integrates multiple disciplines, and prioritizes public impact over publication in peer-reviewed journals. This work produces research at speed while still using peer-reviewed methodologies, providing valuable educational experiences for students and delivering research when it is needed most.

CIP researchers present 6 papers and workshop at 2025 CSCW conference in Norway

In October 2025 at the 28th ACM SIGCHI Conference on Computer-Supported Cooperative Work & Social Computing (CSCW) in Bergen, Norway, CIP-affiliated researchers facilitated one conference workshop and presented 6 papers, including one that received a Best Paper award.

Alien of the gaps: How 3I/ATLAS comet was turned into a spaceship online

When we reach the frontier of current knowledge, we’re tempted to insert a higher power into the space where answers aren’t yet satisfying for all, CIP postdoctoral scholar Mert Can Bayar wrote in December 2025 about the 3I/ATLAS interstellar comet.

Social media research tool can reduce polarization — it could also lead to more user control over algorithms

In a November 2025 study published in Science, CIP faculty member Martin Saveski, a UW Information School assistant professor, and co-authors from Stanford University and Northeastern University detail a new tool showing that it is possible to turn down the partisan rancor in an X feed.

The persuasive potential of AI-paraphrased information at scale

In a July 2025 PNAS Nexus journal article, the CIP’s Saloni Dash, Yiwei Xu, Madeline Jalbert, and Emma Spiro explore how AI-paraphrased messages have the potential to amplify the persuasive impact and scale of information campaigns.

2025 Publications

2025 Publications List

Explore a list of peer-reviewed articles and other contributions published in 2025 authored or co-authored by faculty members, research scientists and fellows, postdoctoral scholars, doctoral and other students affiliated with the CIP.

The conspiracist theory of power

In an article published in Politics and Governance, Mert Can Bayar and Scott Radnitz argue that conspiracism is not merely a critique of power, but an intellectual foundation for a political regime embodied in autocracy, oligarchy, or an alternate technocracy. This analysis informs our understanding of a serious yet under‐theorized threat to liberal democracy. Read the article.

The end of trust and safety?: Examining the future of content moderation and upheavals in professional online safety efforts

Through in-depth interviews with trust and safety professionals, this paper published in Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems and co-authored by Rachel Moran Prestridge, Joseph S. Schafer, Mert Can Bayar and Kate Starbird explores upheavals within the T&S industry, examining current perspectives on content moderation and broader strategies for maintaining safe digital environments. Read the paper.

ElectionRumors2022: A dataset of election rumors on Twitter during the 2022 U.S. midterms

In the Journal of Quantitative Description: Digital Media, Joseph S. Schafer, Kayla Duskin, Stephen Prochaska, Morgan Wack, Anna Beers, Lia Bozarth, Taylor Agajanian, Michael Caulfield, Emma S. Spiro and Kate Starbird present and analyze a dataset of 1.81 million Twitter posts corresponding to 135 distinct rumors which spread online during the midterm election season (September 5 to December 1, 2022). They describe how this data was collected, compiled, and supplemented, and provide a series of exploratory analyses along with comparisons to a previously published dataset on 2020 election rumors. Read the article.

The persuasive potential of AI-paraphrased information at scale

In PNAS Nexus, Saloni Dash, Yiwei Xu, Madeline Jalbert and Emma Spiro study how AI-paraphrased messages have the potential to amplify the persuasive impact and scale of information campaigns. Building from social and cognitive theories on repetition and information processing, they model how CopyPasta, a common repetition tactic leveraged by information campaigns, can be enhanced using large language models. Read the article.

Podcasts in the periphery: Tracing guest trajectories in political podcasts

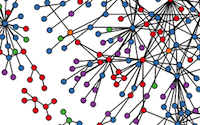

In this paper published in Social Networks, Sydney DeMets and Emma Spiro construct a bipartite network of podcasts and their invited guests. They then use entailment analysis to generate a network of the paths guests take as they move from one podcast to the next, and evaluate whether guests are typically invited to speak on less prominent shows first before moving on to more prominent ones. Read the article.

2024 Publications

2024 Publications List

Explore a list of peer-reviewed articles and other contributions published in 2024 authored or co-authored by faculty members, research scientists and fellows, postdoctoral scholars, doctoral and other students affiliated with the CIP.

Echo chambers in the age of algorithms: An audit of Twitter’s friend recommender system

In the Proceedings of the 16th ACM Web Science Conference, Kayla Duskin, Joseph S. Schafer, Jevin D. West, and Emma S. Spiro conducted an algorithmic audit of Twitter’s Who-To-Follow friend recommendation system, the first empirical audit to investigate the impact of this algorithm in situ. Read the article.

Governance capture in a self-governing community: A qualitative comparison of the Croatian, Serbian, Bosnian, and Serbo-Croatian Wikipedias

In the Proceedings of the ACM on Human-Computer Interaction, Zarine Kharazian, Kate Starbird, and Benjamin Mako Hill use a grounded theory analysis of interviews with members of Serbian and Croatian Wikipedia communities and others in cross-functional platform-level roles. They propose that the convergence of three features — high perceived value as a target, limited early bureaucratic openness, and a preference for personalistic, informal forms of organization over formal ones — produced a window of opportunity for governance capture on Croatian Wikipedia. Read the article.

American academic freedom is in peril

In a Science editorial, Ryan Calo and Kate Starbird write: “Academics researching online misinformation in the US are learning a hard lesson: Academic freedom cannot be taken for granted. They face a concerted effort—including by members of Congress—to undermine or silence their work documenting false and misleading internet content. The claim is that online misinformation researchers are trying to silence conservative voices. The evidence suggests just the opposite.” Read the editorial.

Understanding the discourse of the Black Manosphere on YouTube

In the Companion Publication of the 2024 Conference on Computer-Supported Cooperative Work and Social Computing, Adiza Awwal uses Critical Technocultural Discourse Analysis (CTDA) and virtual ethnography to study prominent misogynistic content creators on YouTube who cater to Black males, analyzing their discursive themes to assess the Black Manosphere as a culturally significant and racially delineated digital phenomenon. Read the article.

On measuring change in networked publics: a case study of United States election publics on Twitter from 2020 to 2022

In Information, Communication & Society, Anna Beers proposes a tri-factor model for measuring change in publics centered on creators, audiences, and platforms, and demonstrates this model on a case study of United States ‘networked election publics’ on Twitter from 2020 to 2022. Analyzing over 600 million posts from 2020 to 2022, Beers finds that right-leaning creators and audiences decreased their activity relative to left-leaning publics in 2022 and faced increased platform moderation via suspensions and shadowbanning. Read the article.

2023 Publications

2023 Publications List

Explore a list of peer-reviewed articles and other contributions published in 2023 authored or co-authored by faculty members, research scientists and fellows, postdoctoral scholars, doctoral and other students affiliated with the CIP.

Rumors have rules

In Issues in Science and Technology, Emma Spiro and Kate Starbird explore how decades-old research about how and why people share rumors is even more relevant today in a world with social media. In their article, Spiro and Starbird revisit the 1954 “Seattle windshield pitting epidemic,” an event where residents in Western Washington reported finding unexplained pits, holes and other damage in their car windshields, leading to wide speculation about the cause. It’s “a textbook example of how rumors propagate: a sort of contagion, spread through social networks, shifting how people perceive patterns and interpret anomalies.” Read the article in Issues in Science and Technology or an excerpt in The Seattle Times.

Influence and improvisation: Participatory disinformation during the 2020 U.S. election

In Social Media + Society’s Special Issue on Political Influencers, Kate Starbird and Stanford co-authors Renée DiResta and Matt DeButts examine how efforts during the 2020 U.S. election to spread a false meta-narrative of widespread voter fraud functioned as a domestic and participatory disinformation campaign, in which a variety of influencers, including hyperpartisan media and political operatives, worked alongside ordinary people to produce and amplify misleading claims, often unwittingly. To better understand the nature of participatory disinformation, the co-authors examine three cases of misleading claims of voter fraud, applying an interpretive, mixed-method approach to the analysis of social media data. Read the article.

Navigating information-seeking in conspiratorial waters: Anti-trafficking advocacy and education post QAnon

In Proceedings of the ACM on Human-Computer Interaction, Rachel E. Moran, Stephen Prochaska, Izzi Grasso and Isabelle Schlegel use in-depth interviews with members of the public and professionals involved in anti-trafficking activism to explore how individuals find trustworthy information about human trafficking amid the public spread of misinformation. Their findings highlight the centrality of distrust as a driving force behind information-seeking on social media. The co-authors also highlight the tensions that arise from using social media as a primary resource within anti-trafficking education and the limitations of interventions to slow the spread of trafficking-related misinformation. Read the article.

Mobilizing manufactured reality: How participatory disinformation shaped deep stories to catalyze action during the 2020 U.S. presidential election

In Proceedings of the ACM on Human-Computer Interaction, Stephen Prochaska, Kayla Duskin, Zarine Kharazian, Carly Minow, Stephanie Blucker, Sylvie Venuto, Jevin D. West, and Kate Starbird present three primary contributions: (1) a framework for understanding the interaction between participatory disinformation and informal and tactical mobilization; (2) three case studies from the 2020 U.S. election analyzed using detailed temporal, content, and thematic analysis; and (3) a qualitative coding scheme for understanding how digital disinformation functions to mobilize online audiences. The co-authors combine resource mobilization theory with previous work examining participatory disinformation campaigns and “deep stories” to show how false or misleading information functioned to mobilize online audiences before, during, and after election day.

Sending news back home: Misinformation lost in transnational social networks

In Proceedings of the ACM on Human-Computer Interaction, Rachel E. Moran, Sarah Nguyễn, and Linh Bui used qualitative coding of social media data and an approach inspired by thematic inductive analysis to investigate how misinformation proliferated through social media sites such as Facebook, and which types of informational content and specific misinformation narratives spread across the Vietnamese diasporic community during the 2020 U.S. election. Informed by the work of organizations such as Viet Fact Check, The Interpreter, and other community-led initiatives working to provide fact-checking and online media analysis in Vietnamese and English, the co-authors present and discuss salient misinformation narratives that spread through Vietnamese diasporic Facebook posts, fact-checking and misleading information patterns, and inter-platform networking activities. Read the article.

Post-spotlight posts: The impact of sudden social media attention on account behavior

In the Companion Publication of the 2023 Conference on Computer Supported Cooperative Work and Social Computing, Joseph S. Schafer and Kate Starbird use statistical analysis to examine how sudden, outsized social media attention affected the subsequent behavior of accounts whose Twitter posts received such attention in the early stages of the Covid-19 pandemic. They find that these accounts were more likely to post after receiving sudden attention, though this effect was not persistent and did not boost original content production. The co-authors also find that these accounts were significantly more likely to alter their self-presentation by updating their “bio” after receiving suddenly increased attention. Read the article.

2022 Publications

2022 Publications List

Explore a list of peer-reviewed articles and other contributions published in 2022 authored or co-authored by faculty members, research scientists and fellows, postdoctoral scholars, doctoral and other students affiliated with the CIP.

Auditing Google's search headlines as a potential gateway to misleading content: Evidence from the 2020 U.S. election

In the Journal of Online Trust and Safety, a team of CIP-affiliated researchers, co-led by Himanshu Zade and Morgan Wack with co-authors Yuanrui Zhang, Kate Starbird, Ryan Calo, Jason Young, and Jevin D. West, examine Google search engine results pages (SERPs) from the 2020 U.S. election and report that video headlines contained a disproportionate amount of undermining-trust content when compared to alternative SERP verticals (search results, stories, and advertisements). The researchers found that “video headlines served to be a notable pathway to content with the potential to undermine trust.” Read the Journal of Online Trust and Safety article. Read more in Tech Policy Press.

Combining interventions to reduce the spread of viral misinformation

In Nature Human Behaviour, Joe Bak-Coleman, Ian Kennedy, Morgan Wack, Andrew Beers, Joseph S. Schafer, Emma Spiro, Kate Starbird, and Jevin West provide a framework to evaluate interventions aimed at reducing viral misinformation online, both in isolation and in combination. The CIP team of researchers derive a generative model of viral misinformation spread, inspired by research on infectious disease. Applying this model to a large corpus (10.5 million tweets) of misinformation events that occurred during the 2020 U.S. election, they show that commonly proposed interventions are unlikely to be effective in isolation. However, their framework demonstrates that a combined approach can achieve a substantial reduction in the prevalence of misinformation. Read the article.

Community-based strategies for combating misinformation: Learning from a popular culture fandom

Using virtual ethnography and semi-structured interviews with 34 Twitter users from the ARMY fandom, a global fan community supporting the Korean music group BTS, Jin Ha Lee and Emma Spiro (with Nicole Santero, Arpita Bhattacharya, and Emma May) examine how effectively fandom communities reduce the impact and spread of misinformation within them. “ARMY fandom exemplifies community-based, grassroot efforts to sustainably combat misinformation and build collective resilience to misinformation at the community level, offering a model for others,” the researchers wrote in a Harvard Kennedy School Misinformation Review article.

Bridging contextual and methodological gaps on the 'misinformation beat': Insights from journalist-researcher collaborations at speed

In the Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, Melinda McClure Haughey, Martina Polovo and Kate Starbird present an ethnographic study of 30 collaborations, drawing on participant observation and interviews with journalists and researchers. They identify five types of collaborations and describe what motivates journalists to reach out to researchers, ranging from a lack of access to data to a need for support in understanding the context of misinformation. Read the article.

Studying mis- and disinformation in Asian diasporic communities: The need for critical transnational research beyond Anglocentrism

In a Harvard Kennedy School Misinformation Review commentary, Sarah Nguyen and Rachel E. Moran (with UNC Chapel Hill co-authors) use case studies from Vietnam, Taiwan, China, and India to discuss research themes and challenges, including legacies of multiple imperialisms, nationalisms, and geopolitical tensions as root causes of mis- and disinformation; difficulties in data collection due to private and closed information networks, language translation and interpretation; and transnational dimensions of information infrastructures and media platforms. This commentary introduces key concepts driven by methodological approaches to better study diasporic information networks beyond the dominance of Anglocentrism in existing mis- and disinformation studies. Read the commentary.

2021 Publications

2021 Publications List

Explore a list of peer-reviewed articles and other contributions published in 2021 authored or co-authored by faculty members, research scientists and fellows, postdoctoral scholars, doctoral and other students affiliated with the CIP.

Stewardship of global collective behavior

In the Proceedings of the National Academy of Sciences, a multidisciplinary group of researchers, including the CIP’s Joe Bak-Coleman, Carl Bergstrom and Rachel E. Moran, says that the study of collective behavior must rise to a “crisis discipline,” just like medicine, conservation and climate science have done. Our ability to confront global crises, from pandemics to climate change, depends on how we interact and share information. Social media and other communication technology restructure these interactions in ways that have consequences. Unfortunately, we have little insight into whether these changes will bring about a healthy, sustainable and equitable world. Read the PNAS article. Learn more about the paper in a CIP blog post.

How do you solve a problem like misinformation?

To understand the dynamics and complexities of misinformation, it’s vital to understand three key distinctions: misinformation vs. disinformation, speech vs. action, and mistaken belief vs. conviction. In a Science Advances essay, CIP co-founders Ryan Calo, Chris Coward, Emma Spiro, Kate Starbird and Jevin West write that failing to recognize these distinctions can “lead to unproductive dead ends,” while understanding them is “the first step toward recognizing misinformation and hopefully addressing it.” Read the essay in Science Advances. Learn more about the essay in a CIP blog post.

Misinformation in and about science

In the Proceedings of the National Academy of Sciences, Jevin West and Carl Bergstrom write that “[a]ppealing as it may be to view science as occupying a privileged epistemic position, scientific communication has fallen victim to the ill effects of an attention economy. This is not to say that science is broken. Far from it. Science is the greatest of human inventions for understanding our world, and it functions remarkably well despite these challenges. Still, scientists compete for eyeballs just as journalists do.” Read the PNAS article. Learn more about the paper in a CIP blog post.

Problematic political advertising on news and media websites around the 2020 U.S. elections

In the Proceedings of the 21st ACM Internet Measurement Conference, UW Paul G. Allen School of Computer Science & Engineering doctoral students Eric Zeng and Miranda Wei, Allen School undergraduate research student Theo Gregersen, Allen School professor Tadayoshi Kohno, and CIP faculty and Allen School associate professor Franziska Roesner collected and analyzed 1.4 million online ads on 745 news and media websites from six cities in the U.S. The findings reveal the widespread use of problematic tactics in political ads, the use of political controversy for clickbait, and the more frequent occurrence of political ads on highly partisan news websites. Read the article.

Addressing the root of vaccine hesitancy during the COVID-19 pandemic

In XRDS: Crossroads, The ACM Magazine for Students, Kolina Koltai, Rachel E. Moran and Izzi Grasso write that the “rise of vaccine hesitancy worldwide cannot be only attributed to the pandemic itself. While certainly, prominent anti-vaccination leaders have maximized their messages with social media and the chaos of the pandemic amplified their narratives, widespread vaccine hesitancy was always something that was a possibility. This is because vaccine misinformation and vaccine hesitancy are rooted in larger socio-ecological issues in society and conspiratorial thinking.” Read the paper.

2020: Peer-reviewed publications

- Jason C. Young, Brandyn Boyd, Katia Yefimova, Stacey Wedlake and Chris Coward. “The role of libraries in misinformation programming: a research agenda.” Journal of Librarianship and Information Science. (2020) https://doi.org/10.1177/0961000620966650

- Melinda McClure-Haughey, Meena Devii Muralikuma, Cameron A. Wood and Kate Starbird. Proceedings of the ACM on Human-Computer Interaction, Volume 4, Issue CSCW2, (2020) Article No.: 133 pp 1–22 https://doi.org/10.1145/3415204

- Rachel E. Moran. “Subscribing to transparency: Trust building within virtual newsrooms on Slack.” Journalism Practice. (2020) https://doi.org/10.1080/17512786.2020.1778507

- Carl T. Bergstrom, Jevin D. West. Calling Bullshit: The Art of Skepticism in a Data-Driven World, Penguin Random House (2020)

- Rachel E. Moran and Nikki Usher. “Objects of journalism, revised: Rethinking materiality in journalism studies through emotion, culture and ‘unexpected objects.’” Journalism. (2020)

Other Publications

- Carl T. Bergstrom and Joseph B. Bak-Coleman. “Information gerrymandering in social networks skews collective decision-making.” Nature. (2019): 40-41. https://www.nature.com/articles/d41586-019-02562-z

- Kate Starbird, Ahmer Arif, and Tom Wilson. “Disinformation as Collaborative Work: Surfacing the Participatory Nature of Strategic Information Operations.” Proceedings of the ACM on Human-Computer Interaction 3, Computer-Supported Cooperative Work (CSCW 2019). Article 127. https://doi.org/10.1145/3359229 http://faculty.washington.edu/kstarbi/StarbirdArifWilson_DisinformationasCollaborativeWork-CameraReady-Preprint.pdf

- Rachel E. Moran and TJ Billard. “Imagining Resistance to Trump Through the Networked Branding of the National Park Service.” Popular Culture and Civic Imagination. (2019)

- Peter M. Krafft, Emma S. Spiro. “Keeping Rumors in Proportion: Managing Uncertainty in Rumor Systems.” Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (2019) https://dl.acm.org/doi/fullHtml/10.1145/3290605.3300876

- Coward, C., McClay, C., Garrido, M. (2018). Public libraries as platforms for civic engagement. Seattle: Technology & Social Change Group, University of Washington Information School.

- Ahmer Arif, Leo G. Stewart, and Kate Starbird. (2018). Acting the Part: Examining Information Operations within #BlackLivesMatter Discourse. PACMHCI. 2, Computer-Supported Cooperative Work (CSCW 2018). Article 20. https://faculty.washington.edu/kstarbi/BLM-IRA-Camera-Ready.pdf

- Philip N. Howard, Samuel Woolley and Ryan Calo (2018) Algorithms, bots, and political communication in the US 2016 election: The challenge of automated political communication for election law and administration, Journal of Information Technology & Politics, 15:2, 81-93, DOI: 10.1080/19331681.2018.1448735

- H. Piwowar, J. Priem, V. Larivière, J.P. Alperin, L. Matthias, B. Norlander, A. Farley, J.D. West, S. Haustein. (2018) “The state of OA: a large-scale analysis of the prevalence and impact of Open Access articles.” PeerJ 6: e4375.

- Carl T. Bergstrom, and Jevin D. West. (2018) “Why scatter plots suggest causality, and what we can do about it.” arXiv preprint arXiv:1809.09328

- Madeline Lamo & Ryan Calo (2018) Regulating Bot Speech. UCLA Law Review. https://www.uclalawreview.org/regulating-bot-speech/

- L. Kim, J.H. Portenoy, J.D. West, Katherine W. Stovel. (2018). Scientific Journals Still Matter in the Era of Academic Search Engines and Preprint Archives. Journal of the Association for Information Science and Technology. (in press)

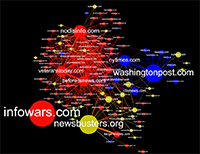

- Kate Starbird, Ahmer Arif, Tom Wilson, Katherine Van Koevering, Katya Yefimova, and Daniel Scarnecchia. (2018). “Ecosystem or Echo-System? Exploring Content Sharing across Alternative Media Domains.” Presented at 12th International AAAI Conference on Web and Social Media (ICWSM 2018), Stanford, CA, (pp. 365-374). https://faculty.washington.edu/kstarbi/Starbird-et-al-ICWSM-2018-Echosystem-final.pdf

- Kate Starbird, D Dailey, O Mohamed, G Lee, ES Spiro. Engage Early, Correct More: How Journalists Participate in False Rumors Online during Crisis Events. CHI, 2018.

- P Krafft, K Zhou, I Edwards, K Starbird, ES Spiro. Centralized, Parallel, and Distributed Information Processing during Collective Sensemaking. CHI, 2017.

- J.D. West. (2017) How to fine-tune your BS meter. Seattle Times. Op-ed

- L. Kim, J.D. West, K. Stovel. (2017) Echo Chambers in Science? American Sociological Association (ASA) Annual Meeting, August 2017

- Kate Starbird. (2017). Examining the Alternative Media Ecosystem through the Production of Alternative Narratives of Mass Shooting Events on Twitter. In 11th International AAAI Conference on Web and Social Media (ICWSM 2017), Montreal, Canada, (pp. 230-239). http://faculty.washington.edu/kstarbi/Alt_Narratives_ICWSM17-CameraReady.pdf

- Ahmer Arif, John Robinson, Stephanie Stanek, Elodie Fichet, Paul Townsend, Zena Worku and Kate Starbird. (2017). A Closer Look at the Self-Correcting Crowd: Examining Corrections in Online Rumors. Proceedings of the ACM 2017 Conference on Computer-Supported Cooperative Work & Social Computing (CSCW ’17), Portland, Oregon, (pp. 155-168). http://faculty.washington.edu/kstarbi/Arif_Starbird_CorrectiveBehavior_CSCW2017.pdf

- Kate Starbird, Emma Spiro, Isabelle Edwards, Kaitlyn Zhou, Jim Maddock and Sindhu Narasimhan. (2016). Could This Be True? I Think So! Expressed Uncertainty in Online Rumoring. Proceedings of the ACM 2016 Conference on Human Factors in Computing Systems (CHI 2016), San Jose, CA. (pp. 360-371). http://faculty.washington.edu/kstarbi/CHI2016_Uncertainty_Round2_FINAL-3.pdf

- A Arif, K Shanahan, R Chou, S Dosouto, K Starbird, ES Spiro. How Information Snowballs: Exploring the Role of Exposure in Online Rumor Propagation. CSCW, 2016.

- L Zeng, K Starbird, ES Spiro. #Unconfirmed: Classifying Rumor Stance in Crisis-Related Social Media Messages. ICWSM, 2016.

- M. Rosvall, A.V. Esquivel, A. Lancichinetti, J.D. West, R. Lambiotte. (2014) Memory in network flows and its effects on spreading dynamics and community detection. Nature Communications. 5:4630, doi:10.1038/ncomms5630

- Kate Starbird, Jim Maddock, Mania Orand, Peg Achterman, and Robert M. Mason. (2014). Rumors, False Flags, and Digital Vigilantes: Misinformation on Twitter after the 2013 Boston Marathon Bombings. Short paper. iConference 2014, Berlin, Germany, (9 pages). http://faculty.washington.edu/kstarbi/Starbird_iConference2014-final.pdf

- J.D. West, T.C. Bergstrom, C.T. Bergstrom. (2014). Cost-effectiveness of open access publications. Economic Inquiry. 52: 1315-1321. doi: 10.1111/ecin.12117

- ES Spiro, J Sutton, S Fitzhugh, M Greczek, N Pierski, CT Butts. Rumoring During Extreme Events: A Case Study of Deepwater Horizon 2010. WebSci, 2012.

- J.D. West, C.T. Bergstrom (2011) Can Ignorance Promote Democracy? Science. 334(6062):1503-1504. doi:10.1126/science.1216124

OTHER PROJECTS

Tracking and Unpacking Rumor Permutations to Understand Collective Sensemaking Online

Emma S. Spiro (PI), Kate Starbird (Co-PI)

This research addresses empirical and conceptual questions about online rumoring, asking: (1) How do online rumors permute, branch, and otherwise evolve over the course of their lifetime? (2) How can theories of rumor spread in offline settings be extended to online interaction, and what factors (technological and behavioral) influence these dynamics, perhaps making online settings distinct environments for information flow? The dynamics of information flow are particularly salient in the context of crisis response, where social media have become an integral part of both the formal and informal communication infrastructure. Improved understanding of online rumoring could inform communication and information-gathering strategies for crisis responders, journalists, and citizens affected by disasters, leading to innovative solutions for detecting, tracking, and responding to the spread of misinformation and malicious rumors. This project has the potential to fundamentally transform both methods and theories for studying collective behavior online during disasters. Techniques developed for tracking rumors as they evolve and spread over social media will aid other researchers in addressing similar problems in other contexts. Learn more

This research addresses empirical and conceptual questions about online rumoring, asking: (1) How do online rumors permute, branch, and otherwise evolve over the course of their lifetime? (2) How can theories of rumor spread in offline settings be extended to online interaction, and what factors (technological and behavioral) influence these dynamics, perhaps making online settings distinct environments for information flow? The dynamics of information flow are particularly salient in the context of crisis response, where social media have become an integral part of both the formal and informal communication infrastructure. Improved understanding of online rumoring could inform communication and information-gathering strategies for crisis responders, journalists, and citizens affected by disasters, leading to innovative solutions for detecting, tracking, and responding to the spread of misinformation and malicious rumors. This project has the potential to fundamentally transform both methods and theories for studying collective behavior online during disasters. Techniques developed for tracking rumors as they evolve and spread over social media will aid other researchers in addressing similar problems in other contexts. Learn more

Tracking and Unpacking Rumor Permutations to Understand Collective Sensemaking Online

Emma S. Spiro (PI), Kate Starbird (Co-PI)

![]() This research addresses empirical and conceptual questions about online rumoring, asking: (1) How do online rumors permute, branch, and otherwise evolve over the course of their lifetime? (2) How can theories of rumor spread in offline settings be extended to online interaction, and what factors (technological and behavioral) influence these dynamics, perhaps making online settings distinct environments for information flow? The dynamics of information flow are particularly salient in the context of crisis response, where social media have become an integral part of both the formal and informal communication infrastructure. Improved understanding of online rumoring could inform communication and information-gathering strategies for crisis responders, journalists, and citizens affected by disasters, leading to innovative solutions for detecting, tracking, and responding to the spread of misinformation and malicious rumors. This project has the potential to fundamentally transform both methods and theories for studying collective behavior online during disasters. Techniques developed for tracking rumors as they evolve and spread over social media will aid other researchers in addressing similar problems in other contexts. Learn more

Understanding Online Audiences for Police on Social Media

Emma S. Spiro (PI)

A number of high-profile incidents have highlighted tensions between citizens and police, bringing issues of police-citizen trust and community policing to the forefront of the public’s attention. Efforts to mediate this tension emphasize the importance of promoting interaction and developing social relationships between citizens and police. This strategy – a critical component of community policing – may be employed in a variety of settings, including social media. While the use of social media as a community policing tool has gained attention from precincts and law enforcement oversight bodies, the ways in which police are expected to use social media to meet these goals remain an open question. This study seeks to explore how police are currently using social media as a community policing tool. It focuses on Twitter – a functionally flexible social media space – and considers whether and how law enforcement agencies are co-negotiating norms of engagement within this space, as well as how the public responds to the behavior of police accounts. Learn more

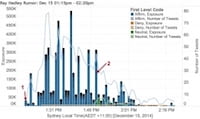

Mass Convergence of Attention During Crisis Events

Emma S. Spiro (PI)

When crises occur, including natural disasters, mass casualty events, and political/social protests, we observe drastic changes in social behavior. Local citizens, emergency responders and aid organizations flock to the physical location of the event. Global onlookers turn to communication and information exchange platforms to seek and disseminate event-related content. This social convergence behavior, long known to occur in offline settings in the wake of crisis events, is now mirrored – perhaps enhanced – in online settings. This project looks specifically at the mass convergence of public attention onto emergency responders during crisis events. Viewed through the framework of social network analysis, convergence of attention onto individual actors can be conceptualized in terms of network dynamics. This project employs a longitudinal study of social network structures in a prominent online social media platform to characterize instances of social convergence behavior and subsequent decay of social ties over time, across different actor types and different event types. Learn more
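
As a rough illustration of how convergence and decay might be measured as network dynamics, the sketch below takes a series of daily follower totals for one responder account, measures the peak gain over the pre-event level, and counts how many days after the peak the account takes to lose half of that gain. The function name, the one-day baseline, and the "half-decay" measure are all assumptions for illustration rather than the project's method, and the follower series would come from whatever longitudinal collection a study actually uses.

```python
from typing import Optional, Sequence

def attention_spike_and_decay(counts: Sequence[int], event_day: int) -> tuple[int, Optional[int]]:
    """Return (peak gain over baseline, days from peak until half the gain is lost).

    counts: daily follower totals for one account (hypothetical input).
    event_day: index of the crisis event in `counts`.
    The baseline is assumed to be the total on the day before the event.
    A decay of None means the account never lost half its gain in the window.
    """
    baseline = counts[event_day - 1] if event_day > 0 else counts[0]
    post = counts[event_day:]
    # Peak of attention (first maximum) at or after the event
    peak_offset = max(range(len(post)), key=lambda i: post[i])
    gain = post[peak_offset] - baseline
    if gain <= 0:
        return 0, None  # no convergence of attention observed
    half_level = baseline + gain / 2
    # Scan forward from the peak for the first day at or below the half-gain level
    for days, total in enumerate(post[peak_offset + 1:], start=1):
        if total <= half_level:
            return gain, days
    return gain, None
```

With `counts=[100, 100, 100, 400, 380, 300, 240, 220]` and `event_day=3`, the function returns `(300, 3)`: the account gained 300 followers and fell back below 250 three days after the peak. Comparing these two numbers across accounts and events is one simple way to contrast actor types and event types.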

Social Interaction and Peer Influence in Activity-Based Online Communities

Emma Spiro (Co-PI), Zack Almquist (Co-PI)

Individuals are influenced by their social networks. People adjust not only their opinions and attitudes, but also their behaviors based on both direct and indirect interaction with peers. Questions about social influence are particularly salient for activity-based behaviors; indeed, much attention has been paid to promoting healthy habits through social interaction in online communities. A particularly interesting implication of peer influence in these settings is the potential for network-based interventions that utilize network processes to promote or contain certain behaviors or actions in a population; however, the first step toward designing such intervention strategies is to understand how, when, and to what extent social signals delivered via social interaction influence behavior. This project fills this gap by using digital traces of behaviors in online platforms to observe and understand how social networks and interactions are associated with behavior and behavior change. Learn more.

Detecting Misinformation Flows in Social Media Spaces During Crisis Events

Kate Starbird (PI), Emma Spiro (Co-PI), Robert Mason (Co-PI)

This research seeks both to understand the patterns and mechanisms of the diffusion of misinformation on social media and to develop algorithms to automatically detect misinformation as events unfold. During natural disasters and other hazard events, individuals increasingly utilize social media to disseminate, search for and curate event-related information. Eyewitness accounts of event impacts can now be shared by those on the scene in a matter of seconds. There is great potential for this information to be used by affected communities and emergency responders to enhance situational awareness and improve decision-making, facilitating response activities and potentially saving lives. Yet several challenges remain; one is the generation and propagation of misinformation. Indeed, during recent disaster events, including Hurricane Sandy and the Boston Marathon bombings, the spread of misinformation via social media was noted as a significant problem; evidence suggests it spread both within and across social media sites as well as into the broader information space.

Taking a novel and transformative approach, this project aims to utilize the collective intelligence of the crowd (the crowdwork of social media users who challenge and correct questionable information) to distinguish misinformation and aid in its detection. It will both characterize the dynamics of misinformation flow online during crisis events and develop a machine learning strategy that automatically identifies misinformation by drawing on these crowd corrections. The project focuses on identifying distinctive behavioral patterns of social media users in both spreading and challenging or correcting misinformation. It incorporates qualitative and quantitative methods, including manual and machine-based content analysis, to look comprehensively at the spread of misinformation. Learn more
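
One way to picture the crowd signal described above: for each rumor, compute the share of stance-bearing posts that challenge or correct it, and flag rumors where that share is high for closer review. Everything here is a hypothetical simplification: the stance labels, the `correction_ratio` and `flag_for_review` helpers, and the 0.3 threshold are assumptions. The project describes a machine learning strategy, and a ratio like this would at most be one input feature to such a model.

```python
from collections import Counter
from typing import Iterable

# Hypothetical stance labels that a coder or classifier might assign to each post
SPREAD, CORRECT, OTHER = "spread", "correct", "other"

def correction_ratio(stances: Iterable[str]) -> float:
    """Share of stance-bearing posts (spreading or correcting) that correct the rumor."""
    counts = Counter(stances)
    relevant = counts[SPREAD] + counts[CORRECT]
    return counts[CORRECT] / relevant if relevant else 0.0

def flag_for_review(stances_by_rumor: dict[str, list[str]], threshold: float = 0.3) -> list[str]:
    """Return sorted rumor IDs whose correction share meets the assumed threshold."""
    return sorted(
        rumor
        for rumor, stances in stances_by_rumor.items()
        if correction_ratio(stances) >= threshold
    )
```

Posts labeled `other` are ignored so that off-topic chatter does not dilute the signal; a real system would also need to account for how quickly corrections arrive, since early rumors may not have attracted any yet.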

Hazards, Emergency Response and Online Informal Communication

Emma S. Spiro (PI)

Project HEROIC, a collaborative, NSF-funded effort with researchers at the University of Kentucky and the University of California, Irvine, strives to better understand the dynamics of informal online communication in response to extreme events.

The nearly continuous, informal exchange of information — including such mundane activities as gossip, rumor, and casual conversation — is a characteristic human behavior, found across societies and throughout recorded history. While often taken for granted, these natural patterns of information exchange become an important “soft infrastructure” for decentralized resource mobilization and response during emergencies and other extreme events. Indeed, despite being historically limited by the constraints of physical proximity, small numbers of available contacts, and the frailties of human memory, informal communication channels are often the primary means by which time-sensitive hazard information first reaches members of the public. This capacity of informal communication has been further transformed by the widespread adoption of mobile devices (such as “smart-phones”) and social media technologies (e.g., microblogging services such as Twitter), which allow individuals to reach much larger numbers of contacts over greater distances than was possible in previous eras.

Although the potential to exploit this capacity for emergency warnings, alerts, and response is increasingly recognized by practitioners, much remains to be learned about the dynamics of informal online communication in emergencies — and, in particular, about the ways in which existing streams of information are modified by the introduction of emergency information from both official and unofficial sources. Our research addresses this gap, employing a longitudinal, multi-hazard, multi-event study of online communication to model the dynamics of informal information exchange in and immediately following emergency situations. Learn more

Eigenfactor Project

Jevin D. West, Carl Bergstrom

The aim of the Eigenfactor Project is to develop methods, algorithms, and visualizations for mapping the structure of science. We use these maps to identify (1) disciplines and emerging areas of science, (2) key authors, papers and venues and (3) communication patterns such as differences in gender bias. We also use these maps to study scholarly publishing models and build recommendation engines and search interfaces for improving how scholars access and navigate the literature. Learn more
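
The Eigenfactor score that gives the project its name rests on an eigenvector (PageRank-style) calculation over a citation network. The sketch below is a simplified version under stated assumptions: a toy citation matrix, self-citations set to zero, uniform teleportation, and a damping factor of 0.85. The published Eigenfactor method differs in details (for example, it uses a five-year citation window and weights teleportation by article counts), so treat this as an illustration of the idea, not the project's implementation; `eigen_scores` is a hypothetical name.

```python
import numpy as np

def eigen_scores(citations: np.ndarray, alpha: float = 0.85,
                 tol: float = 1e-10, max_iter: int = 1000) -> np.ndarray:
    """PageRank-style influence scores for a toy journal citation matrix.

    citations[i, j] = number of citations from journal j to journal i.
    Returns scores that sum to 1.
    """
    C = citations.astype(float)  # astype returns a copy, so the input is untouched
    np.fill_diagonal(C, 0.0)  # ignore journal self-citations
    n = C.shape[0]
    col_sums = C.sum(axis=0)
    # Column-normalize so each citing journal passes on one unit of influence;
    # journals that cite nothing (dangling columns) spread their weight uniformly.
    H = np.where(col_sums > 0, C / np.where(col_sums > 0, col_sums, 1.0), 1.0 / n)
    v = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        # Follow citations with probability alpha, otherwise jump to a random journal
        v_next = alpha * (H @ v) + (1 - alpha) / n
        done = np.abs(v_next - v).sum() < tol
        v = v_next
        if done:
            break
    return v / v.sum()
```

In a three-journal example where journals 1 and 2 each cite journal 0 five times and journal 0 cites journal 1 once, journal 0 receives the highest score and journal 2, which nobody cites, receives only the teleportation share of 0.05. Because self-citations are zeroed out, adding self-citations to the diagonal leaves the scores unchanged.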

Open Access Project

Jevin D. West, Carl Bergstrom

The open access movement has made great strides. There has been a significant increase in open access (OA) journals over the last ten years, and many large foundations now require OA publishing. Unfortunately, during the same period there has also been a significant increase in exploitative, predatory publishers, which charge authors to publish with little or no peer review, editorial services or authentic certification. We are developing a cost-effectiveness tool that will create an open journal market of prices and influence scores in which these kinds of journals can be objectively identified [2]. Learn more
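
The idea of an open market of prices and influence scores can be illustrated with a simple ratio: measured influence per dollar charged to authors. Everything in this sketch is hypothetical, including the `Journal` fields, the per-$1,000 scaling, and the rule that flags fee-charging journals whose ratio falls below a chosen cutoff. It is not the project's tool, and a low ratio on its own would not establish that a publisher is predatory.

```python
from dataclasses import dataclass

@dataclass
class Journal:
    name: str
    apc_usd: float    # article processing charge paid by authors (hypothetical)
    influence: float  # per-article influence score (hypothetical units)

def influence_per_1000_usd(j: Journal) -> float:
    """Influence obtained per $1,000 of author fees; fee-free journals get infinity."""
    return float("inf") if j.apc_usd == 0 else 1000 * j.influence / j.apc_usd

def flag_low_value(journals: list[Journal], min_ratio: float) -> list[str]:
    """Names of fee-charging journals whose influence per $1,000 is below min_ratio."""
    return [
        j.name
        for j in journals
        if j.apc_usd > 0 and influence_per_1000_usd(j) < min_ratio
    ]
```

Publishing both inputs (price and influence) side by side, rather than a single verdict, is what would let authors and funders draw their own comparisons.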

Cross-platform analysis of the information operation targeting the White Helmets in Syria

Kate Starbird (PI)

Ph.D. Student Lead: Tom Wilson

While there is increasing awareness and research about online information operations—efforts by state and non-state actors to manipulate public opinion through methods such as the coordinated dissemination of disinformation, amplification of specific accounts or messages, and targeted messaging by agents who impersonate online political activists—there is still a lot we do not understand, including how these operations take place across social media platforms. In this research we are investigating cross-platform collaboration and content amplification that can work to propagate disinformation, specifically as part of an influence campaign targeting the White Helmets, a volunteer response organization that operates in Syria.

Through a mixed-method approach that uses ‘seed’ data from Twitter, we have examined the role of YouTube in the influence campaign against the White Helmets. Preliminary findings suggest that on Twitter the accounts working to discredit and undermine the White Helmets are far more active and prolific than those that support the group. Furthermore, this cluster of anti-White Helmets accounts on Twitter uses YouTube as a resource, leveraging the specific affordances of the different platforms in complementary ways to achieve their objectives. Learn more
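
A first step in this kind of cross-platform analysis is pulling YouTube links out of the Twitter seed data so that the videos each account cluster shares can be counted and compared. The sketch below assumes tweets have already been reduced to (cluster label, expanded URL) pairs; the cluster labels and both helper functions are hypothetical, and only the common `youtube.com/watch?v=` and `youtu.be/` URL forms are handled.

```python
from collections import Counter, defaultdict
from typing import Iterable, Optional
from urllib.parse import parse_qs, urlparse

def youtube_video_id(url: str) -> Optional[str]:
    """Extract a video ID from common YouTube URL forms, or None if not recognized."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        vid = parsed.path.lstrip("/").split("/")[0]
        return vid or None
    if host in {"youtube.com", "www.youtube.com", "m.youtube.com"} and parsed.path == "/watch":
        return parse_qs(parsed.query).get("v", [None])[0]
    return None

def videos_by_cluster(pairs: Iterable[tuple[str, str]]) -> dict[str, Counter]:
    """Count YouTube video IDs shared by each (hypothetical) account cluster."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for cluster, url in pairs:
        vid = youtube_video_id(url)
        if vid:
            counts[cluster][vid] += 1
    return dict(counts)
```

Normalizing both URL forms to a single video ID matters because the same video is often shared under different links; without it, one heavily amplified video could look like several less popular ones.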

Using Facebook engagements to assess how information operations micro-target online audiences using “alternative” new media

Kate Starbird (PI)

Ph.D. Student Lead: Tom Wilson

There is a pressing need to understand how social media platforms are being leveraged to conduct information operations. In addition to being deployed to influence democratic processes, information operations are also utilized to complement kinetic warfare on a digital battlefield. In prior work we have developed a deep understanding of the Twitter-based information operations conducted in the context of the civil war in Syria. Building on this work, this project examines how Facebook is leveraged within information operations, and how a subsection of the “alternative” media ecosystem is integrated into those operations. We aim to understand the structure and dynamics of the media ecosystem that is utilized by information operations to manipulate public opinion, and more about the audiences that engage with related content from these domains. Our research will provide insight into how Facebook figures into a persistent, multi-platform information operations campaign. Complementing previous research, it will provide insight into how a subsection of the alternative media ecosystem is leveraged by political entities to micro-target participants in specific online communities with strategic messaging and disinformation.

Unraveling Online Disinformation Trajectories: Applying and Translating a Mixed-Method Approach to Identify, Understand and Communicate Information Provenance

Kate Starbird (PI)

This project will improve our understanding of the spread of disinformation in online environments. It will contribute to the field of human-computer interaction in the areas of social computing, crisis informatics, and human-centered data science. Conceptually, it explores relationships between technology, structure, and human action – applying the lens of structuration theory towards understanding how technological affordances shape online action, how online actions shape the underlying structure of the information space, and how those integrated structures shape information trajectories. Methodologically, it enables further development, articulation and evaluation of an iterative, mixed-method approach for interpretative analysis of “big” social data. Finally, it aims to leverage these empirical, conceptual and methodological contributions towards the development of innovative solutions for tracking disinformation trajectories.

The online spread of disinformation is a societal problem at the intersection of online systems and human behavior. This research program aims to enhance our understanding of how and why disinformation spreads and to develop tools and methods that people, including humanitarian responders and everyday analysts, can use to detect, understand, and communicate its spread. The research has three specific, interrelated objectives: (1) to better understand the generation, evolution, and propagation of disinformation; (2) to extend, support, and articulate an evolving methodological approach for analyzing “big” social media data for use in identifying and communicating “information provenance” related to disinformation flows; (3) to adapt and transfer the tools and methods of this approach for use by diverse users for identification of disinformation and communication of its origins and trajectories. More broadly, it will contribute to the advancement of science through enhanced understandings and conceptualization of the relationships between technological affordances, social network structure, human behavior, and intentional strategies of deception. The program includes an education plan that supports PhD student training and recruits diverse undergraduate students into research through multiple mechanisms, including for-credit research groups and an academic bridge program. Learn more

Community Labs in Public Libraries

Rolf Hapel, Chris Coward, Chris Jowaisas, Jason Young

Given their historic role of curating information, libraries have the potential to be key players in combating misinformation, political bias, and other threats to democracy. This research project seeks to support public libraries in their efforts to address misinformation by developing library-based community labs: spaces where patrons can collectively explore pressing social issues. Community labs have become popular across many European countries, where they are used to instigate democratic debate, provide discussion spaces and programming, alleviate community tensions, and promote citizenship and mutual understanding. This project aims to adapt a community lab model for use by public libraries in Washington. Learn more

FEATURED PROJECTS

Misinformation media literacy: Supporting libraries as hubs for misinformation education

In fall 2023, CIP researchers started work on a three-year project, supported by $750,000 in funding from the Institute of Museum and Library Services, that will create a comprehensive, nationwide information literacy program that aims to increase capacity for library staff and community members to address and navigate problematic information in their local communities. CIP researchers have partnered with instructional designers at WebJunction, a free online learning platform for library staff that is part of OCLC Research. They will also collaborate with libraries across the U.S. to implement educational programs modeled on successful programs the CIP has supported that have reached thousands of Washington state students, teachers, librarians and other educators in recent years.

***

Researching the changing role of public trust and misinformation in local communities in Whatcom County, Washington

CIP postdoctoral scholar Rachel Moran-Prestridge received funding from the CIP’s Innovation Fund for a project in Whatcom County, Washington that explored the changing role of public trust and the spread of misinformation. The CIP teamed up with nonprofit library organization OCLC and nonprofit, community-based newsroom Salish Current to explore how library professionals, educators and local journalists in Whatcom County build and sustain trust in their work. The research team held a series of in-person workshops on issues of trust and misinformation that brought together individuals from across local knowledge-building institutions to talk about the challenges of building trust in an era of heightened misinformation and political polarization.

- Learn more about this project

- July 2023 | In an opinion article in The Seattle Times, “Local information providers can cultivate curiosity to build community trust,” the CIP’s Rachel Moran-Prestridge shared insights from this research.

***

Misinformation Escape Room

Media and information literacy underpins the vast majority of educational programs aimed at supporting individuals to become critical information consumers and producers. Most models feature a linear pathway that moves through these steps: defining one’s information question, finding a number of sources through search and other strategies, evaluating the sources for credibility, and using the information to answer the question. With the rise of misinformation and the way it flows through social media, this model is being questioned. Perhaps the most powerful critique is its lack of attention to the emotional and psychological, or affective, dimensions of misinformation that make it so potent and pernicious.

The misinformation escape room project, co-led by CIP co-founder Chris Coward and CIP faculty member Jin Ha Lee, contributes to a growing number of game-based and experiential approaches to building resilience to misinformation. The project seeks to move beyond the rational and cognitivist approach to learning about misinformation by situating the problem as connected to emotion and social interaction. The project’s first escape room, The Euphorigen Investigation, available at Loki’s Loop, draws on research on misinformation, mixed reality games, digital youth and media, and information literacy.

***

Sending the News Back Home: Analyzing the Spread of Misinformation Between Vietnam and Diasporic Communities in the 2020 Election

This project seeks to better understand how misinformation about the 2020 U.S. elections proliferated through social media and how it spread across Vietnamese diasporic communities in the U.S. and between the U.S. and Vietnam. The project, led by Center for an Informed Public postdoctoral fellow Rachel E. Moran and UW Information School PhD student Sarah Nguyễn and supported with funding from George Washington University’s Institute for Data, Democracy & Politics (IDDP), is informed by the work of organizations such as VietFactCheck, The Interpreter, and other community organizations working to provide fact-checking and media analysis in Vietnamese and English.

- JANUARY 2021 | Learn more about Sending News Back Home

- JUNE 2021 | “Early findings from explorations into the Vietnamese misinformation crisis” (English | Tiếng Việt)

***

COVID-19 Rapid-Response Research

Since the start of the COVID-19 pandemic, researchers at the Center for an Informed Public have been engaged in work to better understand how scientific knowledge, expertise, data and communication affect the spread and correction of online misinformation about an emerging pandemic. In May 2020, the National Science Foundation awarded approximately $200,000 in funding through the COVID-19 Rapid Response Research (RAPID) program for a project led by CIP principal investigators and co-founders Emma Spiro, an associate professor at the UW Information School, Kate Starbird, an associate professor in UW’s Department of Human Centered Design & Engineering, and Jevin West, an associate professor in the Information School. Their research looks at how a crisis situation like the COVID-19 pandemic can make the collective sensemaking process more vulnerable to misinformation.

In April 2020, the University of Washington’s Population Health Initiative awarded approximately $20,000 in COVID-19 rapid-response research funding for a proposal, also led by Spiro, Starbird and West, that seeks to understand online discourse during the ongoing pandemic and come up with strategies for improving collective action and sensemaking within science and society.

***

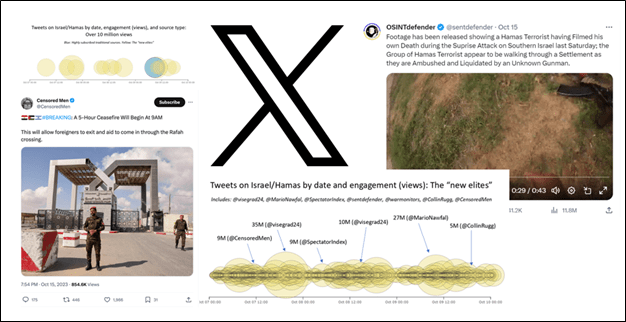

The ‘new elites’ of X: Identifying the most influential accounts engaged in Hamas/Israel discourse

RAPID RESEARCH REPORT | Through a novel data collection process, a team of CIP researchers identified highly influential accounts in Israel-Hamas war discourse on X that together make up the dominant English-language news sources on the platform for the event. In an October 20, 2023, CIP rapid research report that focuses on the first three days of the conflict, Mike Caulfield, Mert Can Bayar and Ashlyn B. Aske compare these accounts to traditional news sources and find that, on average, they have far fewer subscribers yet achieve far greater views, rose to popularity more recently, and post more frequently.

- Read the CIP’s October 20, 2023, rapid research report.

- The CIP’s report was cited by NBC News, The New York Times, The Washington Post, The Atlantic, WNYC Public Radio’s “On the Media” and Cyberscoop.

- In a November 7, 2023, article for Nature, “The new Twitter is changing rapidly — study it before it’s too late,” Mike Caulfield observes: “In my more than ten years in this field, I’ve never seen an almost entirely new set of accounts come to dominate a major platform in less than a year.”

***

YouTube search surfaces good information about the causes of the Lahaina wildfire on Maui, but external links reveal a different world

RAPID RESEARCH REPORT | In August 2023, CIP research scientist Mike Caulfield and director Kate Starbird co-authored a rapid research report examining a variety of “planned crisis event” narratives on X, formerly known as Twitter, that shared unfounded claims that the destructive and deadly Lahaina, Maui wildfire earlier that month was part of a secret plan. At the same time, they found that YouTube’s search interface surfaced reliable information about the wildfire’s causes, even when prompted with a search targeted at bringing up coverage of non-existent deliberate causes.

***

Examining the Role of Public Libraries in Combating Misinformation

Given their role in curating information and offering spaces where community members can collectively explore social issues, libraries are uniquely positioned to be key players in combating misinformation, political polarization and other threats to democracy, particularly at the community level. But while libraries have this potential, few are recognized for effectively fulfilling this role. This research project, led by UW Information School senior research scientist and CIP research fellow Jason C. Young, seeks to understand why.

What are the experiences and challenges of librarians in their professional interactions helping people navigate problematic information? What types of issues do patrons raise? What assistance or programs have librarians provided? Have they been effective? Why or why not? What kinds of interventions could better support their efforts?

The aim of this project is to chart an applied research agenda for researchers and librarians to co-create new tools, resources, programs and services that strengthen the library's role in addressing misinformation.

***

Election Integrity Partnership

In July 2020, the Center for an Informed Public joined with three other partners, the Stanford Internet Observatory, Graphika and the Atlantic Council's DFRLab, to form the Election Integrity Partnership, a nonpartisan dis- and misinformation research consortium that worked to detect and mitigate the impact of attempts to prevent or deter people from voting in the 2020 U.S. elections or to delegitimize election results.

From September to November, a team of approximately 20 CIP-affiliated principal investigators, postdoctoral fellows, PhD students, graduate students and undergraduate research assistants worked on the Election Integrity Partnership's monitoring and analysis teams, which tracked voting-related dis- and misinformation and contributed to numerous rapid-response research blog posts and policy analyses before, during and after election day. That work included research into the ways false claims involving mail- and ballot-dumping incidents helped shape social media narratives around the U.S. elections; a "What to Expect" analysis of the types of voting-related dis- and misinformation researchers anticipated in the days leading up to Nov. 3, 2020, on election day, and in the days and weeks that followed; and an analysis of domestic verified Twitter accounts that consistently amplified misinformation about the integrity of the U.S. elections.

The Election Integrity Partnership's final report, "The Long Fuse: Misinformation and the 2020 Election," was released in March 2021. Craig Newmark Philanthropies and Omidyar Network contributed funding to support the CIP's Election Integrity Partnership research contributions.

***