A rapidly unfolding conflict such as Russia’s invasion of Ukraine, like so many other crisis events, brings considerable uncertainty. Rumors and misinformation thrive on that uncertainty, feeding off the “fog of war.”

On top of that, considering the history of this conflict and the countries involved — and in particular the “active measures” playbook of Russia — we can expect an effort to manipulate the “collective sensemaking” process for political purposes.

That includes strategically shaping the information space to Russia’s benefit by using grey propaganda websites, inauthentic social media accounts, and “unwitting agents” to spread disinformation while confusing, deflecting, distracting, and demoralizing information consumers.

In a tweet thread published late Wednesday, Center for an Informed Public faculty director Kate Starbird, a UW Human Centered Design & Engineering associate professor, offered important observations about navigating the “fog of war” and avoiding adding to the uncertainty and confusion in online spaces.

Scroll down for timely and important insights about the current crisis from CIP researchers, including Starbird, Scott Radnitz, Mike Caulfield, and Jevin West.

***

Amid a ‘Fog of War,’ It’s Important to Slow Down

Starbird’s Feb. 23 tweet thread is republished below, edited for clarity.

- As we turn to social media for information, let’s be extra careful that we don’t become unwitting agents in the spread of disinformation. Go slow. Vet your sources.

- Be wary of unfamiliar accounts. Check their profile. Is the account brand new? Does it have few followers? What was it tweeting a couple of weeks or months ago? Make sure they are who they say they are. If you’re not sure, it’s okay not to retweet.

- Don’t automatically trust your in-network amplifiers. Other folks are moving fast and may not be vetting carefully. Mistakes happen. Don’t let their mistake become your mistake and cascade through your network.

- Images can be taken out of context. Try a reverse image search to see whether an image has been posted before and is now being purposefully spread out of context. (Wish @Twitter had a right-click to check the provenance of images… maybe a future product?)

- Russia is physically attacking Ukraine, but it is also hinting at other kinds of retribution against countries like ours that impose sanctions. Expect that retribution to have hybrid elements: informational attacks and hacking.

- It’s likely that they’ve already laid the groundwork for information operations — that they’ve already infiltrated some of our trusted social networks. If an old Twitter friend starts acting strangely, make a note, and double check that they are who you think they are.

- Check in with your own mental health. Real-time participation in real-world crises and conflicts can take a heavy toll. We want to be informed, and we don’t want to look away while people are suffering. But do take breaks. Avoid images and videos as much as you can. Talk to people.

- You’re here, but I bet some of your kids are on TikTok. Borrow their feed for a bit to see what they’re seeing. In addition to potentially violent content, check for propaganda. Russia’s network of grey propaganda creators knows how to package content for TikTok and other formats.

- It’s going to be tempting to try to score some domestic political points. Resist that urge. There will be time for that later. (We’re especially vulnerable to spreading propaganda/disinformation when we’re engaging from within our partisan political identities.)

- “Fog of war” conditions mean that our understanding of what’s happening is going to change over time as we gain more information. Media and political leaders may have to correct themselves. That doesn’t mean that they’re lying. That’s how information works in crisis contexts.

- Take responsibility. If you do make a mistake, make sure to correct yourself. Don’t just delete. If it’s a Facebook post, update it with the correct information. If it’s a tweet, delete it if it’s still spreading. Then post a clear correction. (Wish we had a retraction button @Twitter.)

- Correcting others can be useful. If it’s someone you care about, do it quietly (a DM). If you don’t know the person well, post a public correction, but recognize you probably won’t change their mind. You may, however, help their audience.

- Tune out places where people aren’t trying to inform but are purposefully building (rather than trying to reduce) uncertainty and muddying the waters. Mute those voices for a while. At best, they’re unhelpful. At worst, they’re adversarial.

In follow-up tweets, Starbird pointed to additional insights from Jane Lytvynenko, a Shorenstein Center senior research fellow, on cybersecurity hygiene for newsroom personnel covering the new war in Ukraine, and from BBC News disinformation reporter Shayan Sardarizadeh on how old or false video footage can go viral during crisis events.

***

Understanding Motivations

CIP faculty member Scott Radnitz, a UW Jackson School of International Studies associate professor who serves as director of the Ellison Center for Russian, East European and Central Asian Studies, shares these additional insights:

- Because Russia’s rationale for invading involves “rescuing” civilians from a supposedly aggressive Ukrainian government, be wary of gruesome and violent images that are intended to evoke an emotional response but may not be what they appear to be at first glance.

- Countries involved in conflict always seek to shape narratives in their favor. Do not automatically accept statements or images from official spokespeople or government accounts. Wait for independent verification.

- Be sensitive to the language you use when talking, tweeting, blogging and sharing other content about the conflict online. The words we use during crisis events can influence how we think. Russian President Vladimir Putin announced the invasion not as the start of a war, but as a “special military operation” to “demilitarize and denazify” Ukraine, a classic example of Orwellian doublespeak. The best way to contribute constructively to public debate is to use clear language and call things what they are.

Radnitz also discussed the politics of conspiracy in Russia in a December Coda Story Q&A and explored false flag attacks in today’s information environment in a recent article in The Conversation.

***

Using the SIFT Method in the Context of the Crisis in Ukraine

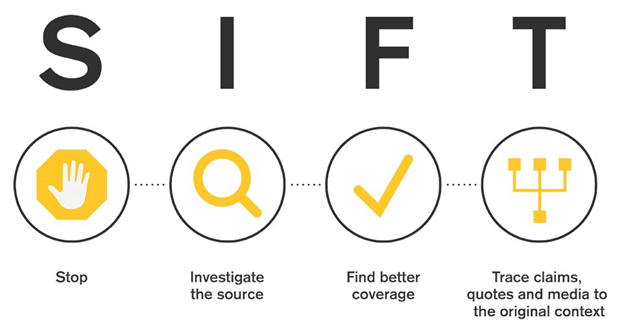

Mike Caulfield, a CIP research scientist who developed the SIFT method for contextualizing claims online, also published a tweet thread on Thursday detailing how to apply SIFT (Stop; Investigate the source; Find better coverage; and Trace claims, quotes and media to the original context) to the current crisis in Ukraine.

***

Our Actions Online Can Have Major Impacts

CIP founding director Jevin West, a UW Information School associate professor, notes that we’re no longer simply information consumers. West explains:

- As soon as we start to share and like content, we become editors. As editors, we curate content not just for ourselves and our friends, but also for the algorithms that feed content to millions of other social media users. As editors, we need to be extra careful during crisis events. We need to think more and share less.

- During crisis events like the current armed conflict in Ukraine, our appetite for information is high at a time when quality information is relatively scarce. We need to be extra careful about what we share and which sources we rely on. The tips and insights laid out above by Kate Starbird, Scott Radnitz, and Mike Caulfield can help in vetting those sources.

TOP IMAGE: People gather on the University of Washington’s Red Square waving Ukrainian flags on Thursday, February 24, 2022. (Photo by UW News)